Below is a patch that adds PREEMPT_RT_FULL support for ARM64-based systems, using the 3.18.9-rt5 kernel as the base.

3) 64K page support for 32-bit apps.
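As a rough illustration of what 64K-page support involves for a 32-bit `mmap2` call: the 32-bit ABI passes the file offset in 4K units, so on a kernel with larger pages the offset has to be rescaled, and rejected if it is not aligned to the native page size. The patch below does this in assembly in `compat_sys_mmap2_wrapper`; the C helper here is only a sketch of the same arithmetic, and the name `mmap2_pgoff` and its `page_shift` parameter are hypothetical.

```c
#include <errno.h>
#include <stdint.h>

/*
 * Hypothetical helper (not part of the patch): convert an mmap2 offset
 * given in 4K units into an offset in native pages.
 * page_shift is 12 for 4K pages and 16 for 64K pages.
 * Returns -EINVAL when the offset is not aligned to the native page size.
 */
static long mmap2_pgoff(uint32_t off_4k, unsigned int page_shift)
{
	unsigned int shift = page_shift - 12;	/* PAGE_SHIFT - 12 */
	uint32_t low_bits = (1u << shift) - 1;	/* ~PAGE_MASK >> 12 */

	if (off_4k & low_bits)
		return -EINVAL;			/* offset not page aligned */

	return (long)(off_4k >> shift);		/* offset in native pages */
}
```

With 64K pages, an offset of 16 (16 x 4K = 64K) maps to native page 1, while an offset of 17 is rejected because it falls inside a 64K page.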

From 983e3630e3058b2deac4be17f3b8ee9dc6769f41 Mon Sep 17 00:00:00 2001
From: Ayyappa Ch <ayyappa.chandolu@amd.com>
Date: Tue, 18 Apr 2015 12:33:28 +0530
Subject: [PATCH] RT patch for AMD platform on top of 3.18.9-rt5 RT Kernel

---
 arch/arm64/Kconfig                   |   7 +-
 arch/arm64/Makefile                  |   2 +-
 arch/arm64/include/asm/cmpxchg.h     |   2 +
 arch/arm64/include/asm/thread_info.h |   3 +
 arch/arm64/include/asm/unistd.h      |   8 ++-
 arch/arm64/include/asm/unistd32.h    |   2 +-
 arch/arm64/kernel/Makefile           |   2 +-
 arch/arm64/kernel/asm-offsets.c      |   1 +
 arch/arm64/kernel/entry.S            |  18 ++++-
 arch/arm64/kernel/entry32.S          | 123 +++++++++++++++++++++++++++++++++++
 arch/arm64/kernel/head.S             |   2 +-
 arch/arm64/kernel/process.c          |  41 ++++++++++++
 arch/arm64/kernel/sys.c              |   4 +-
 arch/arm64/kernel/sys32.S            | 115 --------------------------------
 arch/arm64/kernel/sys32.c            |  57 ++++++++++++++++
 arch/arm64/mm/fault.c                |   4 +-
 include/linux/compat.h               |  12 ++++
 include/linux/syscalls.h             |   8 ++-
 18 files changed, 280 insertions(+), 131 deletions(-)
 create mode 100644 arch/arm64/kernel/entry32.S
 delete mode 100644 arch/arm64/kernel/sys32.S
 create mode 100644 arch/arm64/kernel/sys32.c

diff --git a/arch/arm64/Kconfig b/arch/arm64/Kconfig
index 9532f8d..765b1e2 100644
--- a/arch/arm64/Kconfig
+++ b/arch/arm64/Kconfig
@@ -58,9 +58,11 @@ config ARM64
 	select HAVE_PERF_EVENTS
 	select HAVE_PERF_REGS
 	select HAVE_PERF_USER_STACK_DUMP
+	select HAVE_PREEMPT_LAZY
 	select HAVE_RCU_TABLE_FREE
 	select HAVE_SYSCALL_TRACEPOINTS
 	select IRQ_DOMAIN
+	select IRQ_FORCED_THREADING
 	select MODULES_USE_ELF_RELA
 	select NO_BOOTMEM
 	select OF
@@ -217,6 +219,7 @@ endchoice
 choice
 	prompt "Virtual address space size"
 	default ARM64_VA_BITS_39 if ARM64_4K_PAGES
+	default ARM64_VA_BITS_48 if ARM64_4K_PAGES
 	default ARM64_VA_BITS_42 if ARM64_64K_PAGES
 	help
 	  Allows choosing one of multiple possible virtual address
@@ -233,7 +236,7 @@ config ARM64_VA_BITS_42
 config ARM64_VA_BITS_48
 	bool "48-bit"
-	depends on !ARM_SMMU
+	depends on ARM64_4K_PAGES
 endchoice
@@ -298,6 +301,7 @@ config HOTPLUG_CPU
 	  Say Y here to experiment with turning CPUs off and on. CPUs
 	  can be controlled through /sys/devices/system/cpu.
+
 source kernel/Kconfig.preempt
 config HZ
@@ -409,7 +413,6 @@ source "fs/Kconfig.binfmt"
 config COMPAT
 	bool "Kernel support for 32-bit EL0"
-	depends on !ARM64_64K_PAGES
 	select COMPAT_BINFMT_ELF
 	select HAVE_UID16
 	select OLD_SIGSUSPEND3

diff --git a/arch/arm64/Makefile b/arch/arm64/Makefile
index 20901ff..2984d9c 100644
--- a/arch/arm64/Makefile
+++ b/arch/arm64/Makefile
@@ -39,7 +39,7 @@ head-y		:= arch/arm64/kernel/head.o
 ifeq ($(CONFIG_ARM64_RANDOMIZE_TEXT_OFFSET), y)
 TEXT_OFFSET := $(shell awk 'BEGIN {srand(); printf "0x%03x000\n", int(512 * rand())}')
 else
-TEXT_OFFSET := 0x00080000
+TEXT_OFFSET := 0x0080000
 endif
 export	TEXT_OFFSET GZFLAGS

diff --git a/arch/arm64/include/asm/cmpxchg.h b/arch/arm64/include/asm/cmpxchg.h
index ddb9d78..2a64433 100644
--- a/arch/arm64/include/asm/cmpxchg.h
+++ b/arch/arm64/include/asm/cmpxchg.h
@@ -79,6 +79,8 @@ static inline unsigned long __xchg(unsigned long x, volatile void *ptr, int size
 	__ret; \
 })
+#define __HAVE_ARCH_CMPXCHG 1
+
 static inline unsigned long __cmpxchg(volatile void *ptr, unsigned long old,
 				      unsigned long new, int size)
 {

diff --git a/arch/arm64/include/asm/thread_info.h b/arch/arm64/include/asm/thread_info.h
index 459bf8e..8e9704f 100644
--- a/arch/arm64/include/asm/thread_info.h
+++ b/arch/arm64/include/asm/thread_info.h
@@ -50,6 +50,7 @@ struct thread_info {
 	struct exec_domain	*exec_domain;	/* execution domain */
 	struct restart_block	restart_block;
 	int			preempt_count;	/* 0 => preemptable, <0 => bug */
+	int			preempt_lazy_count;	/* 0 => preemptable, <0 => bug */
 	int			cpu;		/* cpu */
 };
@@ -108,6 +109,7 @@ static inline struct thread_info *current_thread_info(void)
 #define TIF_NEED_RESCHED	1
 #define TIF_NOTIFY_RESUME	2	/* callback before returning to user */
 #define TIF_FOREIGN_FPSTATE	3	/* CPU's FP state is not current's */
+#define TIF_NEED_RESCHED_LAZY	4
 #define TIF_NOHZ		7
 #define TIF_SYSCALL_TRACE	8
 #define TIF_SYSCALL_AUDIT	9
@@ -124,6 +126,7 @@ static inline struct thread_info *current_thread_info(void)
 #define _TIF_NEED_RESCHED	(1 << TIF_NEED_RESCHED)
 #define _TIF_NOTIFY_RESUME	(1 << TIF_NOTIFY_RESUME)
 #define _TIF_FOREIGN_FPSTATE	(1 << TIF_FOREIGN_FPSTATE)
+#define _TIF_NEED_RESCHED_LAZY	(1 << TIF_NEED_RESCHED_LAZY)
 #define _TIF_NOHZ		(1 << TIF_NOHZ)
 #define _TIF_SYSCALL_TRACE	(1 << TIF_SYSCALL_TRACE)
 #define _TIF_SYSCALL_AUDIT	(1 << TIF_SYSCALL_AUDIT)

diff --git a/arch/arm64/include/asm/unistd.h b/arch/arm64/include/asm/unistd.h
index 6d2bf41..3bc498c 100644
--- a/arch/arm64/include/asm/unistd.h
+++ b/arch/arm64/include/asm/unistd.h
@@ -31,6 +31,9 @@
  * Compat syscall numbers used by the AArch64 kernel.
  */
 #define __NR_compat_restart_syscall	0
+#define __NR_compat_exit		1
+#define __NR_compat_read		3
+#define __NR_compat_write		4
 #define __NR_compat_sigreturn		119
 #define __NR_compat_rt_sigreturn	173
@@ -41,10 +44,13 @@
 #define __ARM_NR_compat_cacheflush	(__ARM_NR_COMPAT_BASE+2)
 #define __ARM_NR_compat_set_tls		(__ARM_NR_COMPAT_BASE+5)
-#define __NR_compat_syscalls		386
+#define __NR_compat_syscalls		388
 #endif
 #define __ARCH_WANT_SYS_CLONE
+
+#ifndef __COMPAT_SYSCALL_NR
 #include <uapi/asm/unistd.h>
+#endif
 #define NR_syscalls (__NR_syscalls)

diff --git a/arch/arm64/include/asm/unistd32.h b/arch/arm64/include/asm/unistd32.h
index 9dfdac4..2f163ba 100644
--- a/arch/arm64/include/asm/unistd32.h
+++ b/arch/arm64/include/asm/unistd32.h
@@ -406,7 +406,7 @@ __SYSCALL(__NR_vfork, sys_vfork)
 #define __NR_ugetrlimit 191	/* SuS compliant getrlimit */
 __SYSCALL(__NR_ugetrlimit, compat_sys_getrlimit)		/* SuS compliant getrlimit */
 #define __NR_mmap2 192
-__SYSCALL(__NR_mmap2, sys_mmap_pgoff)
+__SYSCALL(__NR_mmap2, compat_sys_mmap2_wrapper)
 #define __NR_truncate64 193
 __SYSCALL(__NR_truncate64, compat_sys_truncate64_wrapper)
 #define __NR_ftruncate64 194

diff --git a/arch/arm64/kernel/Makefile b/arch/arm64/kernel/Makefile
index 5bd029b..50f1fe1 100644
--- a/arch/arm64/kernel/Makefile
+++ b/arch/arm64/kernel/Makefile
@@ -18,7 +18,7 @@ arm64-obj-y		:= cputable.o debug-monitors.o entry.o irq.o fpsimd.o	\
 			   cpuinfo.o
 arm64-obj-$(CONFIG_COMPAT)		+= sys32.o kuser32.o signal32.o	\
-					   sys_compat.o
+					   sys_compat.o entry32.o
 arm64-obj-$(CONFIG_FUNCTION_TRACER)	+= ftrace.o entry-ftrace.o
 arm64-obj-$(CONFIG_MODULES)		+= arm64ksyms.o module.o
 arm64-obj-$(CONFIG_SMP)			+= smp.o smp_spin_table.o topology.o

diff --git a/arch/arm64/kernel/asm-offsets.c b/arch/arm64/kernel/asm-offsets.c
index 9a9fce0..f774136 100644
--- a/arch/arm64/kernel/asm-offsets.c
+++ b/arch/arm64/kernel/asm-offsets.c
@@ -36,6 +36,7 @@ int main(void)
   BLANK();
   DEFINE(TI_FLAGS,		offsetof(struct thread_info, flags));
   DEFINE(TI_PREEMPT,		offsetof(struct thread_info, preempt_count));
+  DEFINE(TI_PREEMPT_LAZY,	offsetof(struct thread_info, preempt_lazy_count));
   DEFINE(TI_ADDR_LIMIT,		offsetof(struct thread_info, addr_limit));
   DEFINE(TI_TASK,		offsetof(struct thread_info, task));
   DEFINE(TI_EXEC_DOMAIN,	offsetof(struct thread_info, exec_domain));

diff --git a/arch/arm64/kernel/entry.S b/arch/arm64/kernel/entry.S
index 726b910..303d756 100644
--- a/arch/arm64/kernel/entry.S
+++ b/arch/arm64/kernel/entry.S
@@ -349,7 +349,14 @@ el1_irq:
 	ldr	w24, [tsk, #TI_PREEMPT]		// get preempt count
 	cbnz	w24, 1f				// preempt count != 0
 	ldr	x0, [tsk, #TI_FLAGS]		// get flags
-	tbz	x0, #TIF_NEED_RESCHED, 1f	// needs rescheduling?
+	tbz	x0, #TIF_NEED_RESCHED, loop2	// needs rescheduling?
+	bl	el1_preempt
+
+loop2:
+	ldr	w24, [tsk, #TI_PREEMPT_LAZY]	// get preempt lazy count
+	cbnz	w24, 1f				// preempt lazy count != 0
+
+	tbz	x0, #_TIF_NEED_RESCHED_LAZY, 1f	// needs rescheduling?
 	bl	el1_preempt
 1:
 #endif
@@ -365,6 +372,7 @@ el1_preempt:
 1:	bl	preempt_schedule_irq		// irq en/disable is done inside
 	ldr	x0, [tsk, #TI_FLAGS]		// get new tasks TI_FLAGS
 	tbnz	x0, #TIF_NEED_RESCHED, 1b	// needs rescheduling?
+	tbnz	x0, #_TIF_NEED_RESCHED_LAZY, 1b	// needs rescheduling as per lazy?
 	ret	x24
 #endif
@@ -433,7 +441,7 @@ el0_svc_compat:
 	/*
 	 * AArch32 syscall handling
 	 */
-	adr	stbl, compat_sys_call_table	// load compat syscall table pointer
+	adrp	stbl, compat_sys_call_table	// load compat syscall table pointer
 	uxtw	scno, w7			// syscall number in w7 (r7)
 	mov	sc_nr, #__NR_compat_syscalls
 	b	el0_svc_naked
@@ -601,8 +609,12 @@ fast_work_pending:
 	str	x0, [sp, #S_X0]			// returned x0
 work_pending:
 	tbnz	x1, #TIF_NEED_RESCHED, work_resched
+	ldr	x2, [tsk, #TI_PREEMPT_LAZY]	// get preempt lazy count
+	cbnz	x2, loop3			// preempt lazy count != 0
+
+	tbnz	x1, #_TIF_NEED_RESCHED_LAZY, work_resched	// needs rescheduling?
 	/* TIF_SIGPENDING, TIF_NOTIFY_RESUME or TIF_FOREIGN_FPSTATE case */
-	ldr	x2, [sp, #S_PSTATE]
+loop3:	ldr	x2, [sp, #S_PSTATE]
 	mov	x0, sp				// 'regs'
 	tst	x2, #PSR_MODE_MASK		// user mode regs?
 	b.ne	no_work_pending			// returning to kernel

diff --git a/arch/arm64/kernel/entry32.S b/arch/arm64/kernel/entry32.S
new file mode 100644
index 0000000..17f3296
--- /dev/null
+++ b/arch/arm64/kernel/entry32.S
@@ -0,0 +1,123 @@
+/*
+ * Compat system call wrappers
+ *
+ * Copyright (C) 2012 ARM Ltd.
+ * Authors: Will Deacon <will.deacon@arm.com>
+ *	    Catalin Marinas <catalin.marinas@arm.com>
+ *
+ * This program is free software; you can redistribute it and/or modify
+ * it under the terms of the GNU General Public License version 2 as
+ * published by the Free Software Foundation.
+ *
+ * This program is distributed in the hope that it will be useful,
+ * but WITHOUT ANY WARRANTY; without even the implied warranty of
+ * MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
+ * GNU General Public License for more details.
+ *
+ * You should have received a copy of the GNU General Public License
+ * along with this program. If not, see <http://www.gnu.org/licenses/>.
+ */
+
+#include <linux/linkage.h>
+#include <linux/const.h>
+
+#include <asm/assembler.h>
+#include <asm/asm-offsets.h>
+#include <asm/errno.h>
+#include <asm/page.h>
+
+/*
+ * System call wrappers for the AArch32 compatibility layer.
+ */
+
+ENTRY(compat_sys_sigreturn_wrapper)
+	mov	x0, sp
+	mov	x27, #0		// prevent syscall restart handling (why)
+	b	compat_sys_sigreturn
+ENDPROC(compat_sys_sigreturn_wrapper)
+
+ENTRY(compat_sys_rt_sigreturn_wrapper)
+	mov	x0, sp
+	mov	x27, #0		// prevent syscall restart handling (why)
+	b	compat_sys_rt_sigreturn
+ENDPROC(compat_sys_rt_sigreturn_wrapper)
+
+ENTRY(compat_sys_statfs64_wrapper)
+	mov	w3, #84
+	cmp	w1, #88
+	csel	w1, w3, w1, eq
+	b	compat_sys_statfs64
+ENDPROC(compat_sys_statfs64_wrapper)
+
+ENTRY(compat_sys_fstatfs64_wrapper)
+	mov	w3, #84
+	cmp	w1, #88
+	csel	w1, w3, w1, eq
+	b	compat_sys_fstatfs64
+ENDPROC(compat_sys_fstatfs64_wrapper)
+
+/*
+ * Note: off_4k (w5) is always units of 4K. If we can't do the requested
+ * offset, we return EINVAL.
+ */
+#if PAGE_SHIFT > 12
+ENTRY(compat_sys_mmap2_wrapper)
+	tst	w5, #~PAGE_MASK >> 12
+	b.ne	1f
+	lsr	w5, w5, #PAGE_SHIFT - 12
+	b	sys_mmap_pgoff
+1:	mov	x0, #-EINVAL
+	ret	lr
+ENDPROC(compat_sys_mmap2_wrapper)
+#endif
+
+/*
+ * Wrappers for AArch32 syscalls that either take 64-bit parameters
+ * in registers or that take 32-bit parameters which require sign
+ * extension.
+ */
+ENTRY(compat_sys_pread64_wrapper)
+	regs_to_64	x3, x4, x5
+	b	sys_pread64
+ENDPROC(compat_sys_pread64_wrapper)
+
+ENTRY(compat_sys_pwrite64_wrapper)
+	regs_to_64	x3, x4, x5
+	b	sys_pwrite64
+ENDPROC(compat_sys_pwrite64_wrapper)
+
+ENTRY(compat_sys_truncate64_wrapper)
+	regs_to_64	x1, x2, x3
+	b	sys_truncate
+ENDPROC(compat_sys_truncate64_wrapper)
+
+ENTRY(compat_sys_ftruncate64_wrapper)
+	regs_to_64	x1, x2, x3
+	b	sys_ftruncate
+ENDPROC(compat_sys_ftruncate64_wrapper)
+
+ENTRY(compat_sys_readahead_wrapper)
+	regs_to_64	x1, x2, x3
+	mov	w2, w4
+	b	sys_readahead
+ENDPROC(compat_sys_readahead_wrapper)
+
+ENTRY(compat_sys_fadvise64_64_wrapper)
+	mov	w6, w1
+	regs_to_64	x1, x2, x3
+	regs_to_64	x2, x4, x5
+	mov	w3, w6
+	b	sys_fadvise64_64
+ENDPROC(compat_sys_fadvise64_64_wrapper)
+
+ENTRY(compat_sys_sync_file_range2_wrapper)
+	regs_to_64	x2, x2, x3
+	regs_to_64	x3, x4, x5
+	b	sys_sync_file_range2
+ENDPROC(compat_sys_sync_file_range2_wrapper)
+
+ENTRY(compat_sys_fallocate_wrapper)
+	regs_to_64	x2, x2, x3
+	regs_to_64	x3, x4, x5
+	b	sys_fallocate
+ENDPROC(compat_sys_fallocate_wrapper)

diff --git a/arch/arm64/kernel/head.S b/arch/arm64/kernel/head.S
index 0a6e4f9..34abf21 100644
--- a/arch/arm64/kernel/head.S
+++ b/arch/arm64/kernel/head.S
@@ -43,7 +43,7 @@
 #elif (PAGE_OFFSET & 0x1fffff) != 0
 #error PAGE_OFFSET must be at least 2MB aligned
 #elif TEXT_OFFSET > 0x1fffff
-#error TEXT_OFFSET must be less than 2MB
+#error TEXT_OFFSET must be less than 2MB
 #endif
 	.macro	pgtbl, ttb0, ttb1, virt_to_phys

diff --git a/arch/arm64/kernel/process.c b/arch/arm64/kernel/process.c
index fde9923..1a3ac52 100644
--- a/arch/arm64/kernel/process.c
+++ b/arch/arm64/kernel/process.c
@@ -368,6 +368,47 @@ unsigned long arch_align_stack(unsigned long sp)
 	return sp & ~0xf;
 }
+/*
+ * CONFIG_SPLIT_PTLOCK_CPUS results in a page->ptl lock. If the lock is not
+ * initialized by pgtable_page_ctor() then a coredump of the vector page will
+ * fail.
+ */
+
+static int __init vectors_user_mapping_init_page(void)
+{
+	pgd_t *pgd;
+	pud_t *pud;
+	pmd_t *pmd;
+	struct page *page;
+	unsigned long addr = UL(0xffffffffffffffff);
+
+	if ((((long)addr) >> VA_BITS) != -1UL)
+		return 0;
+
+	pgd = pgd_offset_k(addr);
+	if (pgd_none(*pgd))
+		return 0;
+
+	pud = pud_offset(pgd, addr);
+	if (pud_none(*pud))
+		return 0;
+
+	if (pud_sect(*pud))
+		return pfn_valid(pud_pfn(*pud));
+
+	pmd = pmd_offset(pud, addr);
+	if (pmd_none(*pmd))
+		return 0;
+
+	page = pmd_page(*(pmd));
+
+	pgtable_page_ctor(page);
+
+	return 0;
+}
+late_initcall(vectors_user_mapping_init_page);
+
+
 static unsigned long randomize_base(unsigned long base)
 {
 	unsigned long range_end = base + (STACK_RND_MASK << PAGE_SHIFT) + 1;

diff --git a/arch/arm64/kernel/sys.c b/arch/arm64/kernel/sys.c
index 3fa98ff..b2a6508 100644
--- a/arch/arm64/kernel/sys.c
+++ b/arch/arm64/kernel/sys.c
@@ -39,9 +39,9 @@ asmlinkage long sys_mmap(unsigned long addr, unsigned long len,
 /*
  * Wrappers to pass the pt_regs argument.
  */
+asmlinkage long sys_rt_sigreturn_wrapper(void);
 #define sys_rt_sigreturn	sys_rt_sigreturn_wrapper
-#include <asm/syscalls.h>
 #undef __SYSCALL
 #define __SYSCALL(nr, sym)	[nr] = sym,
@@ -50,7 +50,7 @@ asmlinkage long sys_mmap(unsigned long addr, unsigned long len,
  * The sys_call_table array must be 4K aligned to be accessible from
  * kernel/entry.S.
  */
-void *sys_call_table[__NR_syscalls] __aligned(4096) = {
+void * const sys_call_table[__NR_syscalls] __aligned(4096) = {
 	[0 ... __NR_syscalls - 1] = sys_ni_syscall,
 #include <asm/unistd.h>
 };

diff --git a/arch/arm64/kernel/sys32.S b/arch/arm64/kernel/sys32.S
deleted file mode 100644
index 423a5b3..0000000
--- a/arch/arm64/kernel/sys32.S
+++ /dev/null
@@ -1,115 +0,0 @@
-/*
- * Compat system call wrappers
- *
- * Copyright (C) 2012 ARM Ltd.
- * Authors: Will Deacon <will.deacon@arm.com>
- *	    Catalin Marinas <catalin.marinas@arm.com>
- *
- * This program is free software; you can redistribute it and/or modify
- * it under the terms of the GNU General Public License version 2 as
- * published by the Free Software Foundation.
- *
- * This program is distributed in the hope that it will be useful,
- * but WITHOUT ANY WARRANTY; without even the implied warranty of
- * MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
- * GNU General Public License for more details.
- *
- * You should have received a copy of the GNU General Public License
- * along with this program. If not, see <http://www.gnu.org/licenses/>.
- */
-
-#include <linux/linkage.h>
-
-#include <asm/assembler.h>
-#include <asm/asm-offsets.h>
-
-/*
- * System call wrappers for the AArch32 compatibility layer.
- */
-
-compat_sys_sigreturn_wrapper:
-	mov	x0, sp
-	mov	x27, #0		// prevent syscall restart handling (why)
-	b	compat_sys_sigreturn
-ENDPROC(compat_sys_sigreturn_wrapper)
-
-compat_sys_rt_sigreturn_wrapper:
-	mov	x0, sp
-	mov	x27, #0		// prevent syscall restart handling (why)
-	b	compat_sys_rt_sigreturn
-ENDPROC(compat_sys_rt_sigreturn_wrapper)
-
-compat_sys_statfs64_wrapper:
-	mov	w3, #84
-	cmp	w1, #88
-	csel	w1, w3, w1, eq
-	b	compat_sys_statfs64
-ENDPROC(compat_sys_statfs64_wrapper)
-
-compat_sys_fstatfs64_wrapper:
-	mov	w3, #84
-	cmp	w1, #88
-	csel	w1, w3, w1, eq
-	b	compat_sys_fstatfs64
-ENDPROC(compat_sys_fstatfs64_wrapper)
-
-/*
- * Wrappers for AArch32 syscalls that either take 64-bit parameters
- * in registers or that take 32-bit parameters which require sign
- * extension.
- */
-compat_sys_pread64_wrapper:
-	regs_to_64	x3, x4, x5
-	b	sys_pread64
-ENDPROC(compat_sys_pread64_wrapper)
-
-compat_sys_pwrite64_wrapper:
-	regs_to_64	x3, x4, x5
-	b	sys_pwrite64
-ENDPROC(compat_sys_pwrite64_wrapper)
-
-compat_sys_truncate64_wrapper:
-	regs_to_64	x1, x2, x3
-	b	sys_truncate
-ENDPROC(compat_sys_truncate64_wrapper)
-
-compat_sys_ftruncate64_wrapper:
-	regs_to_64	x1, x2, x3
-	b	sys_ftruncate
-ENDPROC(compat_sys_ftruncate64_wrapper)
-
-compat_sys_readahead_wrapper:
-	regs_to_64	x1, x2, x3
-	mov	w2, w4
-	b	sys_readahead
-ENDPROC(compat_sys_readahead_wrapper)
-
-compat_sys_fadvise64_64_wrapper:
-	mov	w6, w1
-	regs_to_64	x1, x2, x3
-	regs_to_64	x2, x4, x5
-	mov	w3, w6
-	b	sys_fadvise64_64
-ENDPROC(compat_sys_fadvise64_64_wrapper)
-
-compat_sys_sync_file_range2_wrapper:
-	regs_to_64	x2, x2, x3
-	regs_to_64	x3, x4, x5
-	b	sys_sync_file_range2
-ENDPROC(compat_sys_sync_file_range2_wrapper)
-
-compat_sys_fallocate_wrapper:
-	regs_to_64	x2, x2, x3
-	regs_to_64	x3, x4, x5
-	b	sys_fallocate
-ENDPROC(compat_sys_fallocate_wrapper)
-
-#undef __SYSCALL
-#define __SYSCALL(x, y)		.quad	y	// x
-
-/*
- * The system calls table must be 4KB aligned.
- */
-	.align	12
-ENTRY(compat_sys_call_table)
-#include <asm/unistd32.h>

diff --git a/arch/arm64/kernel/sys32.c b/arch/arm64/kernel/sys32.c
new file mode 100644
index 0000000..7800bb1
--- /dev/null
+++ b/arch/arm64/kernel/sys32.c
@@ -0,0 +1,57 @@
+/*
+ * arch/arm64/kernel/sys32.c
+ *
+ * Copyright (C) 2015 ARM Ltd.
+ *
+ * This program is free software; you can redistribute it and/or modify
+ * it under the terms of the GNU General Public License version 2 as
+ * published by the Free Software Foundation.
+ *
+ * This program is distributed in the hope that it will be useful,
+ * but WITHOUT ANY WARRANTY; without even the implied warranty of
+ * MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
+ * GNU General Public License for more details.
+ *
+ * You should have received a copy of the GNU General Public License
+ * along with this program. If not, see <http://www.gnu.org/licenses/>.
+ */
+
+/*
+ * Needed to avoid conflicting __NR_* macros between uapi/asm/unistd.h and
+ * asm/unistd32.h.
+ */
+#define __COMPAT_SYSCALL_NR
+
+#include <linux/compiler.h>
+#include <linux/syscalls.h>
+#include <asm/page.h>
+
+asmlinkage long compat_sys_sigreturn_wrapper(void);
+asmlinkage long compat_sys_rt_sigreturn_wrapper(void);
+asmlinkage long compat_sys_statfs64_wrapper(void);
+asmlinkage long compat_sys_fstatfs64_wrapper(void);
+asmlinkage long compat_sys_pread64_wrapper(void);
+asmlinkage long compat_sys_pwrite64_wrapper(void);
+asmlinkage long compat_sys_truncate64_wrapper(void);
+asmlinkage long compat_sys_ftruncate64_wrapper(void);
+asmlinkage long compat_sys_readahead_wrapper(void);
+asmlinkage long compat_sys_fadvise64_64_wrapper(void);
+asmlinkage long compat_sys_sync_file_range2_wrapper(void);
+asmlinkage long compat_sys_fallocate_wrapper(void);
+#if PAGE_SHIFT > 12
+asmlinkage long compat_sys_mmap2_wrapper(void);
+#else
+#define compat_sys_mmap2_wrapper sys_mmap_pgoff
+#endif
+
+#undef __SYSCALL
+#define __SYSCALL(nr, sym)	[nr] = sym,
+
+/*
+ * The sys_call_table array must be 4K aligned to be accessible from
+ * kernel/entry.S.
+ */
+void * const compat_sys_call_table[__NR_compat_syscalls] __aligned(4096) = {
+	[0 ... __NR_compat_syscalls - 1] = sys_ni_syscall,
+#include <asm/unistd32.h>
+};

diff --git a/arch/arm64/mm/fault.c b/arch/arm64/mm/fault.c
index 41cb6d3..e82c79e 100644
--- a/arch/arm64/mm/fault.c
+++ b/arch/arm64/mm/fault.c
@@ -211,7 +211,7 @@ static int __kprobes do_page_fault(unsigned long addr, unsigned int esr,
 	 * If we're in an interrupt or have no user context, we must not take
 	 * the fault.
 	 */
-	if (in_atomic() || !mm)
+	if (!mm || pagefault_disabled())
 		goto no_context;
 	if (user_mode(regs))
@@ -358,6 +358,8 @@ static int __kprobes do_translation_fault(unsigned long addr,
 	if (addr < TASK_SIZE)
 		return do_page_fault(addr, esr, regs);
+	if (interrupts_enabled(regs))
+		local_irq_enable();
 	do_bad_area(addr, esr, regs);
 	return 0;
 }

diff --git a/include/linux/compat.h b/include/linux/compat.h
index e649426..ab25814 100644
--- a/include/linux/compat.h
+++ b/include/linux/compat.h
@@ -357,6 +357,9 @@ asmlinkage long compat_sys_lseek(unsigned int, compat_off_t, unsigned int);
 asmlinkage long compat_sys_execve(const char __user *filename, const compat_uptr_t __user *argv,
 		     const compat_uptr_t __user *envp);
+asmlinkage long compat_sys_execveat(int dfd, const char __user *filename,
+		     const compat_uptr_t __user *argv,
+		     const compat_uptr_t __user *envp, int flags);
 asmlinkage long compat_sys_select(int n, compat_ulong_t __user *inp,
 		compat_ulong_t __user *outp, compat_ulong_t __user *exp,
@@ -686,6 +689,15 @@ asmlinkage long compat_sys_sendfile64(int out_fd, int in_fd,
 asmlinkage long compat_sys_sigaltstack(const compat_stack_t __user *uss_ptr,
 				       compat_stack_t __user *uoss_ptr);
+#ifdef __ARCH_WANT_SYS_SIGPENDING
+asmlinkage long compat_sys_sigpending(compat_old_sigset_t __user *set);
+#endif
+
+#ifdef __ARCH_WANT_SYS_SIGPROCMASK
+asmlinkage long compat_sys_sigprocmask(int how, compat_old_sigset_t __user *nset,
+				       compat_old_sigset_t __user *oset);
+#endif
+
 int compat_restore_altstack(const compat_stack_t __user *uss);
 int __compat_save_altstack(compat_stack_t __user *, unsigned long);
 #define compat_save_altstack_ex(uss, sp) do { \

diff --git a/include/linux/syscalls.h b/include/linux/syscalls.h
index bda9b81..96b2ded 100644
--- a/include/linux/syscalls.h
+++ b/include/linux/syscalls.h
@@ -410,12 +410,16 @@ asmlinkage long sys_newlstat(const char __user *filename,
 				struct stat __user *statbuf);
 asmlinkage long sys_newfstat(unsigned int fd, struct stat __user *statbuf);
 asmlinkage long sys_ustat(unsigned dev, struct ustat __user *ubuf);
-#if BITS_PER_LONG == 32
+#if defined(__ARCH_WANT_STAT64) || defined(__ARCH_WANT_COMPAT_STAT64)
 asmlinkage long sys_stat64(const char __user *filename,
 				struct stat64 __user *statbuf);
 asmlinkage long sys_fstat64(unsigned long fd, struct stat64 __user *statbuf);
 asmlinkage long sys_lstat64(const char __user *filename,
 				struct stat64 __user *statbuf);
+asmlinkage long sys_fstatat64(int dfd, const char __user *filename,
+			       struct stat64 __user *statbuf, int flag);
+#endif
+#if BITS_PER_LONG == 32
 asmlinkage long sys_truncate64(const char __user *path, loff_t length);
 asmlinkage long sys_ftruncate64(unsigned int fd, loff_t length);
 #endif
@@ -771,8 +775,6 @@ asmlinkage long sys_openat(int dfd, const char __user *filename, int flags,
 			   umode_t mode);
 asmlinkage long sys_newfstatat(int dfd, const char __user *filename,
 			       struct stat __user *statbuf, int flag);
-asmlinkage long sys_fstatat64(int dfd, const char __user *filename,
-			       struct stat64 __user *statbuf, int flag);
 asmlinkage long sys_readlinkat(int dfd, const char __user *path, char __user *buf,
 			       int bufsiz);
 asmlinkage long sys_utimensat(int dfd, const char __user *filename,
--
1.9.1

A few days later, I saw a patch for the same feature being discussed on the Linux mailing list.

The only difference I could see is that my assembly uses compare-and-branch-on-non-zero instructions, whereas the mailing-list version uses compare-and-branch-on-zero instructions.
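Whether the assembly branches on zero or on non-zero, the decision it encodes should be the same. As a minimal sketch of how I read the intended lazy-preempt check on IRQ exit (this is a C model, not a transcription of the assembly; `struct ti_model` and `should_preempt_on_irq_exit` are hypothetical names, and the flag bit numbers are copied from the `thread_info.h` hunk):

```c
#include <stdbool.h>

/* Bit numbers, as defined in the thread_info.h hunk of the patch. */
#define TIF_NEED_RESCHED	1
#define TIF_NEED_RESCHED_LAZY	4

/* Hypothetical stand-in for the thread_info fields the check reads. */
struct ti_model {
	unsigned long flags;
	int preempt_count;
	int preempt_lazy_count;
};

/* Returns true when the IRQ exit path should call into the scheduler. */
static bool should_preempt_on_irq_exit(const struct ti_model *ti)
{
	if (ti->preempt_count != 0)
		return false;	/* preemption disabled */

	if (ti->flags & (1UL << TIF_NEED_RESCHED))
		return true;	/* ordinary reschedule request */

	if (ti->preempt_lazy_count != 0)
		return false;	/* lazy preemption disabled */

	/* lazy reschedule request */
	return (ti->flags & (1UL << TIF_NEED_RESCHED_LAZY)) != 0;
}
```

One detail worth keeping in mind when comparing this with the assembly: `tbz` and `tbnz` take a bit number as their immediate rather than a mask, which is why the model tests bit `TIF_NEED_RESCHED_LAZY` (4) and not the `_TIF_NEED_RESCHED_LAZY` mask value (16).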

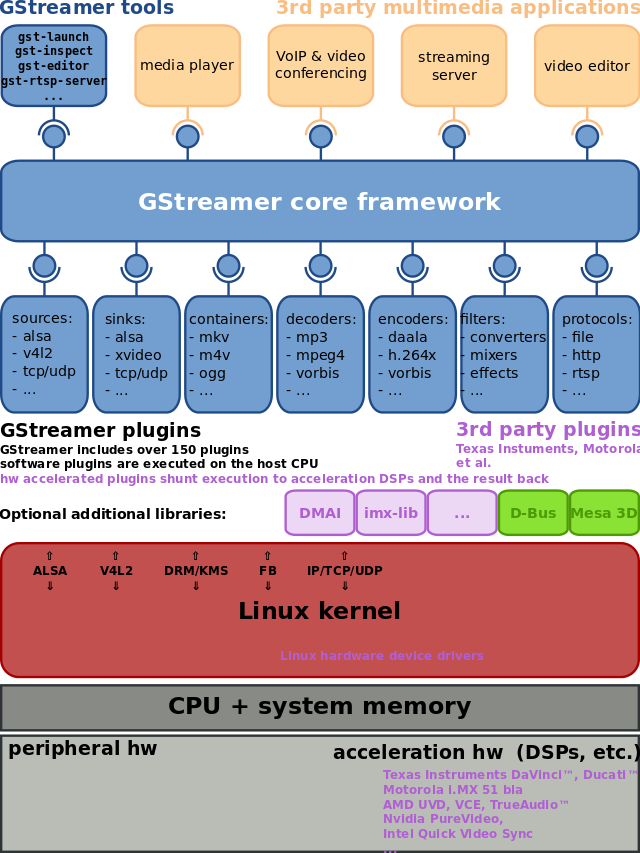

Cyclictest latency diagram with this change: